Nike Air Max 720 Experiment

Details

- Date: February 25, 2019

- Category: GUI Applications

- Client: Nike

- Organization: Hisseli Harikalar

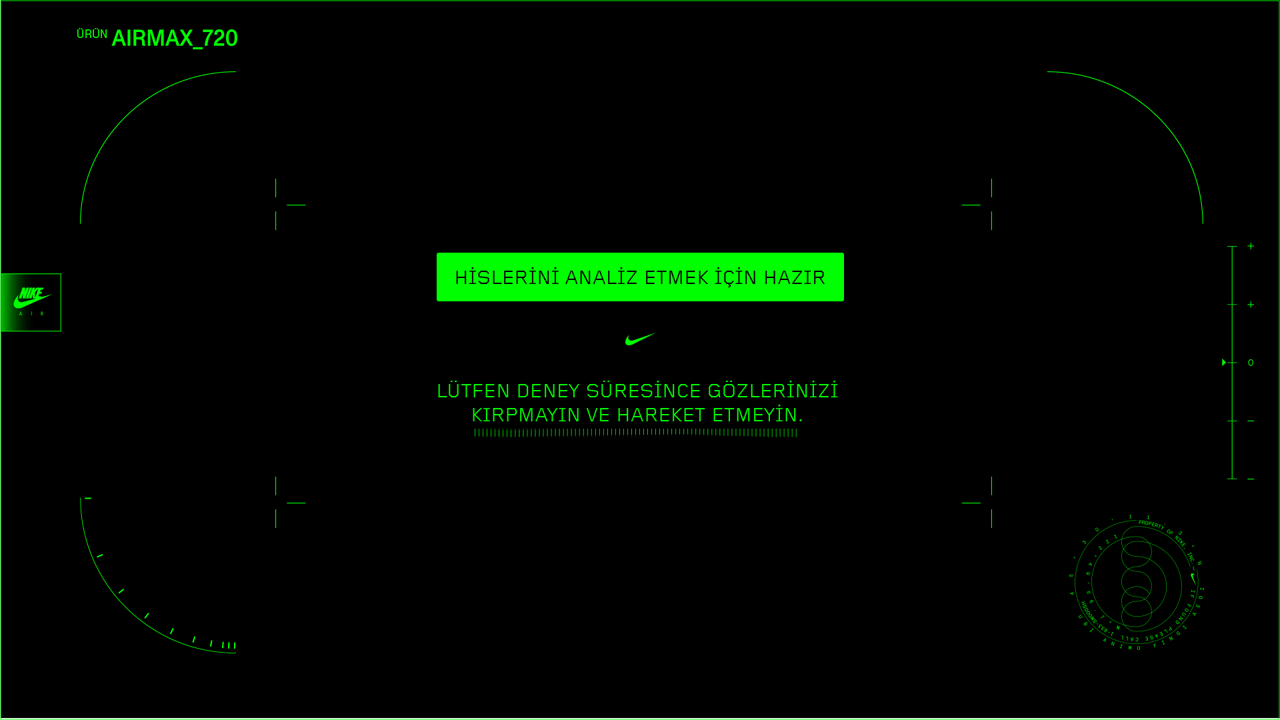

UI Application:

All UI designs came from the Awesome Bros Company, which also managed the project. I developed the GUI application in Unity3D using C#.

UI Design Link

The software first collects the participant's personal information: name, e-mail address, gender, and age. It then captures a 3D depth scan of the participant's face with a Microsoft Kinect sensor. This face depth data is used in the video that the software generates at runtime, which participants can then share on social media.

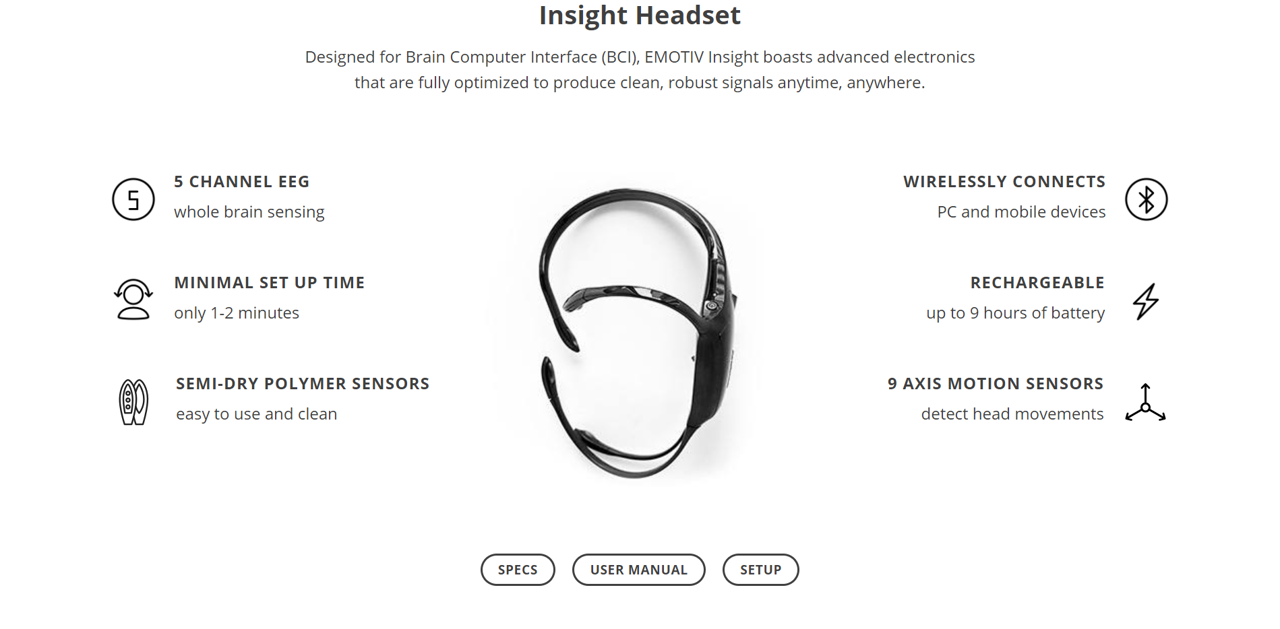

Once the participant has entered their personal information and scanned their face, the experience starts. The EMOTIV Insight 5 Channel Mobile Brainwear® headset is already in place on the participant's head, and it starts capturing and interpreting EEG data while the participant is still entering their information and taking the 3D scan.

All the data coming from the participant was recorded throughout the experiment: the raw EEG signal together with the derived metrics Focus, Stress, Interest, Excitement, Engagement, and Relaxation.
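The recording step can be pictured as one timestamped log in which each raw EEG sample row also carries the most recent derived metrics. The following is a minimal Python sketch under stated assumptions, not the original Unity/C# code: `SessionRecorder` and the CSV layout are hypothetical, the channel names assume the Insight's five electrode sites (AF3, T7, Pz, T8, AF4), and the metric values would come from the headset's SDK, which is not modelled here.

```python
import csv
import io
import time
from dataclasses import dataclass, field
from typing import Optional

# Assumed channel order (Insight electrode sites) and the six derived metrics.
EEG_CHANNELS = ["AF3", "T7", "Pz", "T8", "AF4"]
METRICS = ["focus", "stress", "interest", "excitement", "engagement", "relaxation"]


@dataclass
class SessionRecorder:
    """Hypothetical recorder: one CSV row per raw sample, metrics alongside."""
    session_id: str
    stream: io.TextIOBase
    latest_metrics: dict = field(default_factory=lambda: {m: None for m in METRICS})

    def __post_init__(self):
        self.writer = csv.writer(self.stream)
        self.writer.writerow(["session_id", "timestamp"] + EEG_CHANNELS + METRICS)

    def update_metrics(self, metrics: dict) -> None:
        # Derived metrics usually arrive less often than raw samples,
        # so only the most recent value of each one is kept.
        for name, value in metrics.items():
            if name not in self.latest_metrics:
                raise KeyError(f"unknown metric: {name}")
            self.latest_metrics[name] = value

    def record_sample(self, eeg: dict, timestamp: Optional[float] = None) -> None:
        # Raw channel values first, then the latest metrics (empty if not yet received).
        ts = time.time() if timestamp is None else timestamp
        row = [self.session_id, ts] + [eeg[ch] for ch in EEG_CHANNELS]
        row += [self.latest_metrics[m] for m in METRICS]
        self.writer.writerow(row)
```

Keeping raw samples and metrics in the same row means a single file per session can be replayed or handed on for analysis without re-aligning two separate streams by time.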

During the experiment, the participant watched a prepared video, and the data was recorded while they were watching it.

You can find the video that participants watched during the experience below:

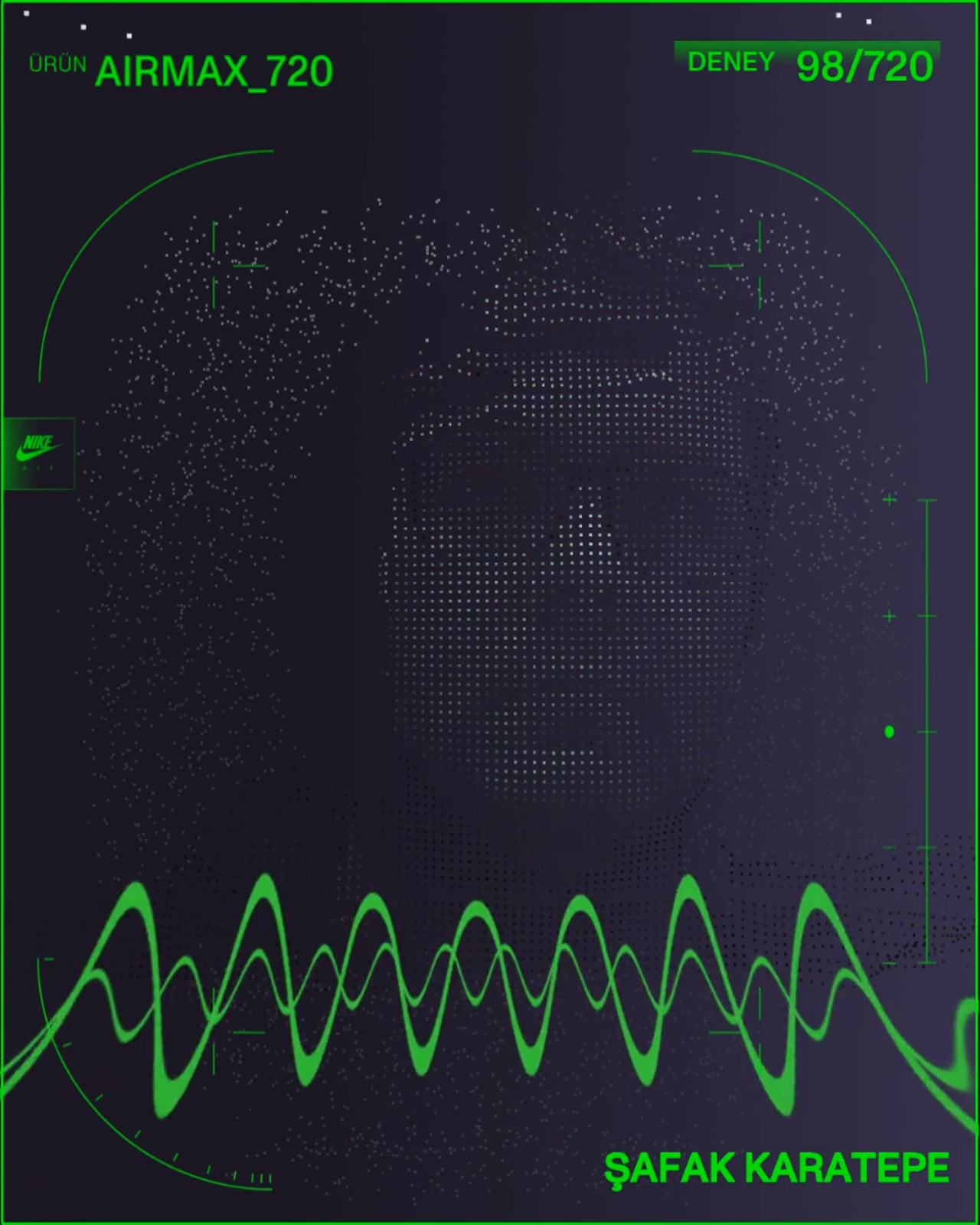

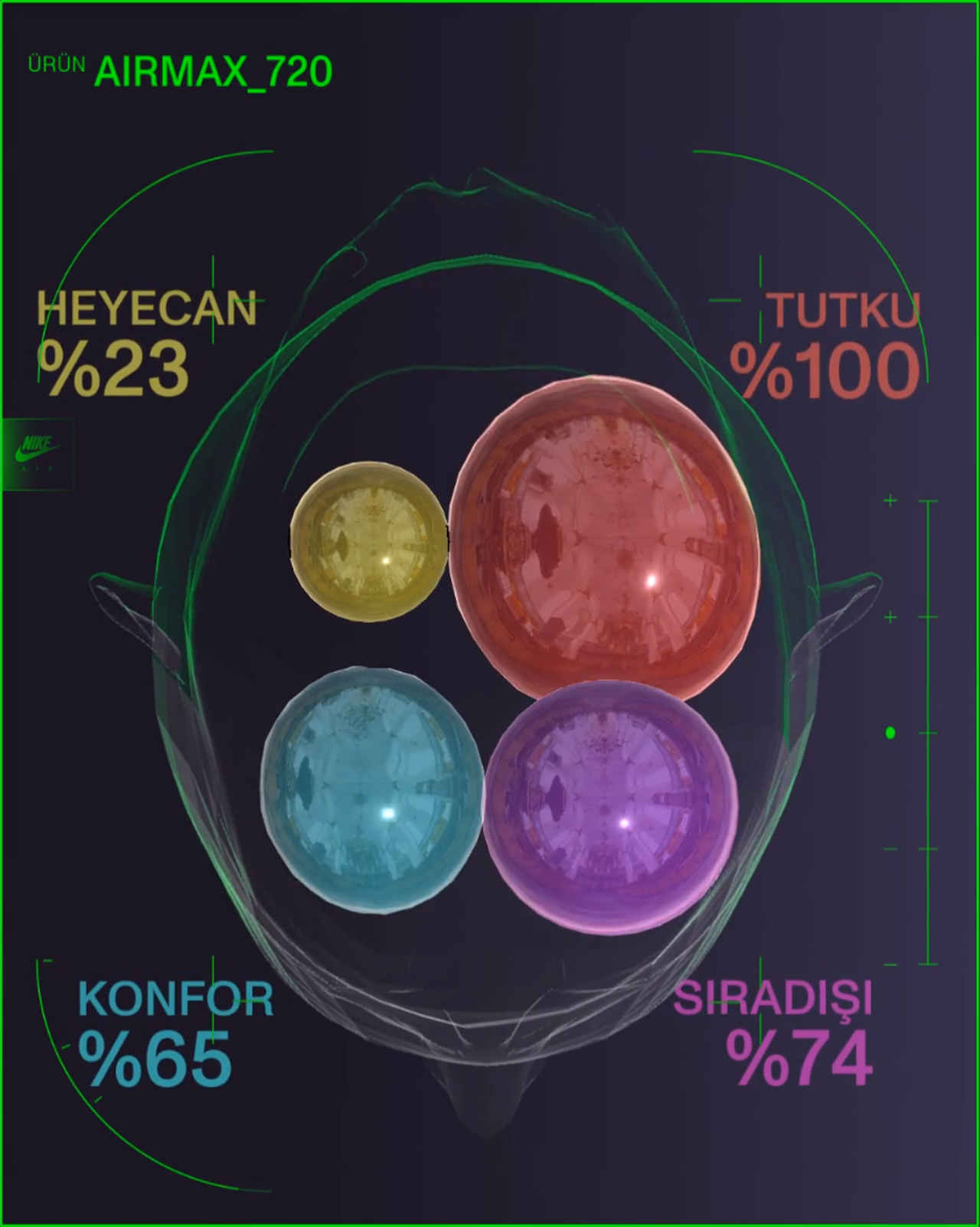

After recording, the software starts the 3D rendering process to produce a video that the participant receives as a memento of the experiment. Every video is unique. It contains the participant's own 3D face scan, and a special segment shows balls of feelings bouncing inside the head. These feelings are taken from the participant's EEG data and are rendered for that participant alone. The same video can never be produced twice, because the graphics and physics engines spawn these soft balls at runtime and simulate their bouncing. Even if the same balls were created with the same sizes in the same places, their movements and the rendered result would differ from the first run.
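The idea behind the balls of feelings can be sketched as: turn each feeling value into a ball, spawn it somewhere inside the head, and let a physics step move it around. This is a toy Python sketch, not the Unity scene: the spherical head, the linear metric-to-radius mapping, the random spawn, and all names (`spawn_balls`, `step`, `simulate`, `HEAD_RADIUS`) are hypothetical assumptions made only for illustration.

```python
import math
import random
from dataclasses import dataclass

HEAD_RADIUS = 1.0  # hypothetical head volume, modelled as a sphere of radius 1


@dataclass
class Ball:
    feeling: str
    radius: float
    pos: list
    vel: list


def spawn_balls(metrics, rng, min_r=0.05, max_r=0.25):
    """Turn each metric in [0, 1] into a ball whose radius grows with the value."""
    balls = []
    for feeling, value in metrics.items():
        value = min(max(value, 0.0), 1.0)
        radius = min_r + (max_r - min_r) * value
        # Random start inside a cube that fits inside the head, leaving room for the ball.
        limit = (HEAD_RADIUS - radius) / math.sqrt(3)
        pos = [rng.uniform(-limit, limit) for _ in range(3)]
        vel = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        balls.append(Ball(feeling, radius, pos, vel))
    return balls


def step(balls, dt=0.01, gravity=-9.81, restitution=0.8):
    """One explicit Euler step; balls bounce off the inside of the spherical head."""
    for b in balls:
        b.vel[1] += gravity * dt
        b.pos = [p + v * dt for p, v in zip(b.pos, b.vel)]
        dist = math.sqrt(sum(p * p for p in b.pos))
        max_dist = HEAD_RADIUS - b.radius
        if dist > max_dist:
            n = [p / dist for p in b.pos]  # outward normal at the contact point
            b.pos = [c * max_dist for c in n]  # push back onto the boundary
            vn = sum(v * c for v, c in zip(b.vel, n))
            if vn > 0:
                # Reflect the outward velocity component, losing some energy.
                b.vel = [v - (1 + restitution) * vn * c for v, c in zip(b.vel, n)]


def simulate(metrics, seed=None, steps=500):
    rng = random.Random(seed)
    balls = spawn_balls(metrics, rng)
    for _ in range(steps):
        step(balls)
    return balls
```

Unlike a real-time game engine, this toy is fully deterministic for a fixed seed, so here the variation between runs comes only from the random spawn. In an engine, frame timing and solver details add further differences, which is the effect described above.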

The rendered videos were produced in two sizes, one for the post format and one for the story format. The software generates the video content in real time and runs the 3D rendering process once the experiment is done.
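Rendering the same scene in two sizes mostly comes down to a per-format resolution and camera setup. The sketch below is a hypothetical Python illustration, not the project's configuration: the 1080×1080 post and 1080×1920 story resolutions are assumed typical social-media sizes, the file naming is invented, and the camera is assumed to keep a fixed horizontal field of view so the head is framed at the same width in both formats.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class OutputFormat:
    name: str
    width: int
    height: int

    @property
    def aspect(self) -> float:
        return self.width / self.height


# Assumed resolutions: a square feed post and a 9:16 story.
FORMATS = (
    OutputFormat("post", 1080, 1080),
    OutputFormat("story", 1080, 1920),
)


def vertical_fov(horizontal_fov_deg: float, aspect: float) -> float:
    """Vertical FOV (degrees) that keeps a given horizontal FOV at this aspect ratio."""
    h = math.radians(horizontal_fov_deg)
    return math.degrees(2 * math.atan(math.tan(h / 2) / aspect))


def render_jobs(session_id: str, horizontal_fov_deg: float = 60.0):
    """One hypothetical render job per format, named after the session ID."""
    return [
        {
            "output": f"{session_id}_{fmt.name}_{fmt.width}x{fmt.height}.mp4",
            "width": fmt.width,
            "height": fmt.height,
            "vertical_fov": round(vertical_fov(horizontal_fov_deg, fmt.aspect), 2),
        }
        for fmt in FORMATS
    ]
```

For the portrait story format the vertical field of view widens, so the extra height shows more of the scene above and below the head instead of cropping it at the sides.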

Post Version Example:

Story Version Example:

Backend Management

After rendering and data recording, all generated videos and data files were uploaded to the server, each named by a unique ID. The system did not run in a single place: two separate setups were working at the same time, and these multiple locations required data management on the server side. At the start of every experiment session, the client GUI application requests a unique ID from the server and books it before doing anything else.
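Booking the ID before the session starts is what keeps two setups from writing to the same name. The server-side idea can be sketched in Python as an in-memory registry guarded by a lock. This is a minimal sketch assuming a single server process: `SessionIdRegistry`, the `session-000001` ID format, and the rule that uploaded file names start with the ID are hypothetical, and the real backend's API and storage are not described in the post.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class Booking:
    session_id: str
    setup: str
    files: list = field(default_factory=list)


class SessionIdRegistry:
    """Hypothetical server-side registry that hands out IDs before a session starts."""

    def __init__(self, prefix: str = "session"):
        self._lock = threading.Lock()
        self._next = 1
        self._prefix = prefix
        self._bookings = {}

    def book(self, setup: str) -> str:
        # The lock makes "read counter, increment, store" atomic, so two setups
        # asking at the same moment can never receive the same ID.
        with self._lock:
            session_id = f"{self._prefix}-{self._next:06d}"
            self._next += 1
            self._bookings[session_id] = Booking(session_id, setup)
        return session_id

    def register_upload(self, session_id: str, filename: str) -> None:
        # Uploads are accepted only for booked IDs, and file names must start
        # with that ID so files from different setups never collide.
        with self._lock:
            booking = self._bookings.get(session_id)
            if booking is None:
                raise KeyError(f"session {session_id} was never booked")
            if not filename.startswith(session_id):
                raise ValueError(f"{filename!r} is not named after {session_id}")
            booking.files.append(filename)

    def files_for(self, session_id: str) -> list:
        with self._lock:
            return list(self._bookings[session_id].files)
```

A usage flow would be: a setup calls `book("setup-a")` when a participant starts, keeps the returned ID for the whole session, and names the rendered videos and data files with it before uploading.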

Data Visualization

All of this data, containing the raw EEG and the moment-to-moment feelings, was handed over to the world-renowned digital artist Refik Anadol. He turned it into a digital sculpture, which was exhibited in the windows of every major Nike store and at iconic locations in İstanbul.

Related Posts

Check out my all posts-

January 9, 2020

We have used special mobile devices to get the participants' brain EEG data of the frontal lobe. The backend software an...

Category GUI APPLICATIONS

-

March 3, 2019

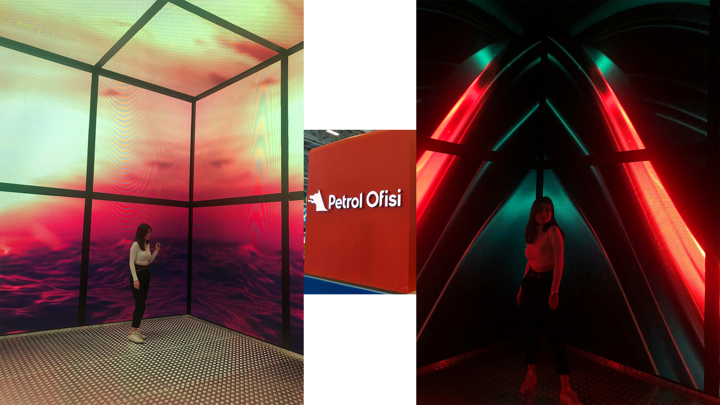

The Cube is designed to make the participants experience the immersive 360 movie at a fair. The area is 6m x 6m and 4m i...

Category 3D MODELLING

-

December 1, 2018

The digital penalty product works with the Microsoft Kinect Sensor which is an infrared detector of the human skeleton. ...

Category KINECT SENSOR APPLICATIONS

Comments

2/25/2019

utku olcar

Data Visualization, EEG Device, Emotiv, Event Technology, Kinect Sensor Game Programming, GUI Applications, Kinect Sensor Applications
